
Training Speed on Multi-GPU

Posted: Mon Oct 12, 2020 10:44 pm

by dheinz70

Also I noticed this the other day.

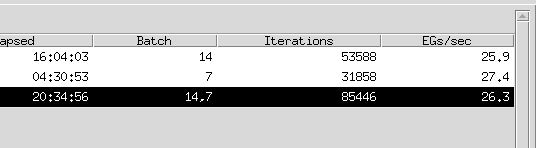

Distributed across both GPUs with a batch size of 14, versus GPU1 alone with a batch size of 7.

Shouldn't the distributed run with a batch size of 14 get roughly 2x the EG/s of the single GPU with a batch size of 7?
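The expectation in the question is simple arithmetic. It assumes synchronous data parallelism, where the global batch of 14 is split evenly into 7 per GPU, so the ideal throughput is the single-GPU EG/s multiplied by the number of GPUs. Below is a minimal Python sketch for checking how close a run gets to that ideal. The function name and the throughput figures are hypothetical. They are not read from the screenshot, and they are not part of faceswap.

```python
def scaling_efficiency(single_gpu_egs: float, multi_gpu_egs: float, num_gpus: int) -> float:
    """Return the fraction of ideal linear speed-up achieved (1.0 = perfect).

    Assumes each GPU in the multi-GPU run processes the same per-GPU batch
    as the single-GPU run (e.g. 7), so N GPUs should ideally reach N times
    the single-GPU examples per second.
    """
    if single_gpu_egs <= 0 or num_gpus < 1:
        raise ValueError("need positive single-GPU throughput and at least one GPU")
    ideal = single_gpu_egs * num_gpus
    return multi_gpu_egs / ideal


# Hypothetical numbers for illustration only:
single = 20.0       # EG/s, one GPU, batch size 7
distributed = 30.0  # EG/s, two GPUs, global batch size 14 (7 per GPU)

eff = scaling_efficiency(single, distributed, num_gpus=2)
print(f"ideal: {single * 2:.1f} EG/s, measured: {distributed:.1f} EG/s, efficiency: {eff:.0%}")
```

Real multi-GPU runs usually land below 1.0, because the GPUs must synchronize their gradients every step. How far below depends on the hardware and the model.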

- Screenshot from 2020-10-12 17-39-26.png

Re: Log and graph weirdness

Posted: Wed Oct 14, 2020 9:58 am

by torzdf

You get a speed-up by increasing your batch size.

The same batch size on a single GPU or on multiple GPUs is likely to run at about the same speed, or maybe slightly slower on multiple GPUs.
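The point that larger batches train faster can be illustrated with a toy model. It assumes each training step pays a fixed per-step overhead (kernel launches, the weight update) plus a cost proportional to the batch size. This is a simplification: real GPU timings are not linear, and VRAM caps the usable batch size. The function name and timing values are hypothetical.

```python
def throughput(batch_size: int, fixed_overhead_s: float, per_example_s: float) -> float:
    """Examples per second under a toy linear step-time model.

    step_time = fixed_overhead_s + per_example_s * batch_size
    The fixed part is paid once per step, so larger batches spread it
    over more examples and throughput rises toward 1 / per_example_s.
    """
    step_time = fixed_overhead_s + per_example_s * batch_size
    return batch_size / step_time


# Hypothetical timings, chosen only to show the trend:
for bs in (4, 8, 16, 32):
    print(bs, round(throughput(bs, fixed_overhead_s=0.1, per_example_s=0.01), 1))
```

Under these assumptions, throughput climbs with batch size but never exceeds the 100 EG/s ceiling set by `per_example_s`. That is why gains taper off as the batch grows.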

EDIT

I misread your message. I don't have a multi-GPU setup, so I can't compare, and I don't know where the "sweet spot" is. Hopefully someone who does have a multi-GPU setup can offer some insight.