NB: This guide was correct at time of writing, but things do change. I will try to keep it updated.

Last edited: 15 Apr 2024

Contents

- Contents

- Introduction

- Why Extract?

- Extracting

- Sorting

- Clean the Alignments File

- Manually Fix Alignments

- Extracting a training set from an alignments file

- Re-inserting filtered out faces into an alignments file

Introduction

A lot of people get overwhelmed when they start out Faceswapping, and many mistakes are made. Mistakes are good. It's how we learn, but it can sometimes help to have a bit of an understanding of the processes involved before diving in.

In this post I will detail a workflow for Extraction. I'm not saying that this is the best workflow, or what you should do, but it works for me, and will hopefully give you a good jump off point for creating your own workflow.

I will be using the GUI for this guide, but the premise is exactly the same for the cli (all the options present in the GUI are available in the cli).

Why Extract?

At the highest level, extraction consists of three phases: detection, alignment and mask generation:

- Detection - The process of finding faces within a frame. The detector scans an image and selects areas of the image that it thinks are faces.

- Alignment - Finding the "landmarks" (explained later) within a face and orienting the faces consistently. This takes the candidates from the detector, and tries to work out where the key features (eyes, nose etc.) are on the potential face. It then attempts to use this information to align the face.

- Mask Generation - Identify the parts of an aligned face that contain face and block out those areas that contain background/obstructions.

There are several plugins for each of these. Their pros and cons are detailed in the tooltips (for the GUI) or the help text (for the cli), so I won't be covering specifics.

Extracting serves 2 main purposes:

- Training: To generate a set of faces for training. These faces will also contain the alignment information and masks which are required for training the model. This information is saved in the .png metadata of the extracted face.

- Converting: To generate an alignments file and mask for converting your final frames. The alignment file contains information about where each face is in each frame so that the conversion process knows where to swap faces for any given frame. When generating an alignments file for converting, then the extracted faces are not actually required, but they are useful for cleaning the alignments file (more on this later).

There is technically a 3rd purpose which is when you are extracting for converting and want some of these faces for training as well. I will also cover this off.

Whilst the extracted faces are not required for convert (only the alignments file is), it is useful to have them so that we can clean up our dataset for the conversion process. Similarly, if extracting faces for a training set, then the original alignments file is not actually required. However, it is useful to keep the alignments file, along with the original source, as it is the "master" document, and allows you to re-extract faces from the source directly from the information contained within the alignments file.

The Alignments File

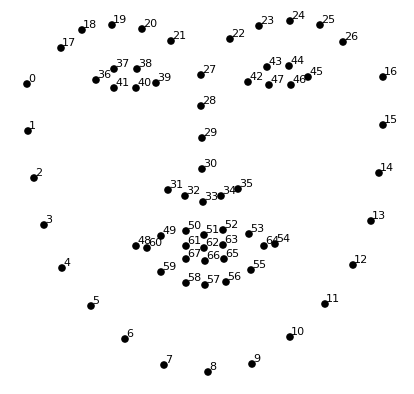

The alignments file holds information about all the faces it finds in each frame, specifically where the face is, and where the 68 point landmarks are:

It also stores any masks that have been extracted for each face.

Do not underestimate the importance of the alignments file. It is the master document that contains information about the location of faces and any associated information for each face for a given source. Having a clean and up to date alignments file can save you time in the future, as you can easily re-generate face sets straight from the file.

The alignments file specifically holds the following information:

- The location of each face in each frame

- The 68 point landmarks of each face in each frame

- The "components" and "extended" mask for each frame

- Any other user generated masks

- A small jpg thumbnail of the aligned face (used for the manual tool)
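To make the list above a little more concrete, here is a rough sketch of the kind of record that is kept for each face. The class and field names are purely illustrative assumptions on my part and do not reflect Faceswap's actual file format.

```python
from dataclasses import dataclass, field


@dataclass
class FaceEntry:
    """One detected face in one frame (hypothetical layout, not the real format)."""
    box: tuple                                   # x, y, width, height of the face in the frame
    landmarks: list                              # the 68 (x, y) landmark points
    masks: dict = field(default_factory=dict)    # mask name -> mask data
    thumbnail: bytes = b""                       # small jpg of the aligned face


# Frame name -> every face found in that frame
alignments = {}

face = FaceEntry(box=(100, 80, 256, 256), landmarks=[(0.0, 0.0)] * 68)
face.masks["components"] = b""                   # placeholder for real mask data
face.masks["extended"] = b""
alignments.setdefault("frame_000001.png", []).append(face)

print(len(alignments["frame_000001.png"][0].landmarks))
```

The point to take away is that everything hangs off the frame, which is why the file can act as the "master" document for a source.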

So now we know why we need to extract faces, how do we nail down a good workflow?

Extracting

The first step, whatever your reason for creating a faceset, is to actually extract the faces from the frames.

Extract Configuration Settings

It is worth reviewing the Extract Configuration settings first to familiarize yourself with them. If this is the first time you have used Faceswap then it is unlikely that you are going to need to change anything here, but the options are covered for future reference.

To access the configuration settings select Settings > Configure Plugins... from the menu bar or hit the shortcut button to jump straight to the correct settings:

This will bring up the following window. Settings for each of the extraction plugins are displayed on the left hand side. You can click into them and read the tooltips to see what each of them does. I won't cover the individual plugins. We will just focus on the options in the Global options page:

- Settings

These are options that apply globally across all plugins.

- Allow Growth: [Nvidia Only] Only enable this if you receive persistent errors that cuDNN couldn't be initialized. This happens to some people with no real pattern. This option allocates VRAM as it is required, rather than allocating all at once. Whilst this is safer, it can lead to VRAM fragmentation, so leave it off unless you need it.

- Filters

Faceswap has a number of filters built in to try to discard obvious false-positives from the extraction output. That is, any image that can be discarded as it clearly is not a face. All of the defaults are set to fairly loose values, to avoid false-negatives. Any filter can be disabled by setting the value to 0.

- Aligner Min Scale - Aligned faces which are unusually small compared to the size of the frame they are extracted from tend to be false-positives, so these can be discarded. The number provided here is multiplied by the smallest side of the frame (so for a 1920x1080 image, this would be 1080; for a 720x1280 image, this would be 720, etc.). Generally the default value here will be fine, but if you have frames where the face does not take up much of the frame at all, you may want to lower this, or disable the filter altogether.

- Aligner Max Scale - Similarly to min-scale, aligned faces which are unusually large compared to the original frame tend to be false-positives, so can be discarded. The value here works the same way as with min-scale (i.e. it is multiplied by the smallest edge of the frame). Again, the default value will mostly be fine, but if you are extracting from sources that are super-zoomed in on the face, then you may want to adjust this up to capture these faces, or disable the filter altogether.

- Aligner Distance - The distance is calculated by how far the detected face landmark points deviate from an 'average' face. Values above 15 will remove obvious false-positives, but will probably still allow a fair number of images that are not a face through. However, setting this to lower values (around 10) is likely to also remove more extreme angles, which generally is not desirable. The closer to 0 this is set, the more faces will be filtered out that are not looking straight towards the camera.

- Aligner Roll - Roll is how much a head is tilted left to right. In theory aligned faces should have a roll close to zero (ie: the eyes should be close to level in the aligned image). However, extreme profiles tend to have an issue with calculating roll correctly, so lowering this value below the default will see more extreme profiles discarded. If you have a lot of profiles in the source you are extracting from, you may want to disable this filter.

- Aligner Features - This filter looks at the location of the lowest eye/eyebrow point in the aligned landmarks and compares it to the highest mouth point. In aligned images eyes should always be located above the mouth, so any face where this does not hold true is misaligned and will be discarded. This filter is fairly safe to always leave enabled.

- Filter Refeeds - If the re-feed option has been selected during extraction, then any of the interim alignments which are found to fail the aligner-filter test during each re-feed will be filtered out prior to averaging the final result. This can be useful for a couple of reasons.

- It removes potential outliers/misaligns from contributing towards the final aligned image, which should lead to better alignments

- It can also sometimes find alignments which would not normally be found. EG: for a difficult face and a re-feed value of 7, it is possible that it only finds valid alignments in 1 of the re-feeds, with the other 7 failing the filter test. In this instance, with Filter Refeeds enabled, the 7 incorrect alignments will be filtered out, with the 1 valid alignment being used for the final face alignment.

- Save Filtered - Rather than discarding faces that are filtered out, it is possible to output the filtered faces into their own sub-folder. This is useful for visualising what kind of images are actually being filtered out. If the face filters filter or nfilter are used (in the next section of this guide) then this option will also apply to any faces filtered out there.

Note that if 'save filtered' is enabled, these faces will still not exist within the final alignments file. It is possible to re-insert filtered out faces back into an alignments file, which is covered at the end of this guide.
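As a rough illustration of two of the filters above (min/max scale and aligner features), here is a minimal sketch. The function names, the way face size is measured (the side length of the face box in pixels) and the example values are my own assumptions rather than Faceswap's internals. The landmark slices assume the common 68 point ordering (17-26 eyebrows, 36-47 eyes, 48-67 mouth).

```python
def scale_limits_px(frame_width, frame_height, min_scale, max_scale):
    """Turn the min/max scale settings into pixel sizes.

    Both settings are multiplied by the smallest side of the frame.
    A setting of 0 disables that filter (returned here as None).
    """
    shortest = min(frame_width, frame_height)
    low = shortest * min_scale if min_scale > 0 else None
    high = shortest * max_scale if max_scale > 0 else None
    return low, high


def passes_scale(face_size_px, limits):
    """True if the face is neither unusually small nor unusually large."""
    low, high = limits
    if low is not None and face_size_px < low:
        return False
    if high is not None and face_size_px > high:
        return False
    return True


def passes_features(landmarks):
    """Eyes/eyebrows must sit above the mouth in the aligned image.

    Image y grows downwards, so the "lowest" eye point has the largest y
    and the "highest" mouth point has the smallest y.
    """
    eyes_and_brows = landmarks[17:27] + landmarks[36:48]
    mouth = landmarks[48:68]
    lowest_eye = max(y for _, y in eyes_and_brows)
    highest_mouth = min(y for _, y in mouth)
    return lowest_eye < highest_mouth


# Example values only, these are not the Faceswap defaults
limits = scale_limits_px(1920, 1080, min_scale=0.05, max_scale=2.0)
print(passes_scale(30, limits), passes_scale(400, limits))
```

With these example values a 30px face in a 1080p frame is discarded as too small, whilst a 400px face is kept.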

- re-align

If the re-align option has been selected during extraction, then these settings dictate how it will be applied.

- Realign Refeeds - If enabled, and re-feed has been selected during extraction, then this will attempt to re-align every re-feed prior to averaging the final result. If disabled, then only the final averaged alignments will be re-aligned. Enabling this option will bring back the best final alignments, but will significantly slow down the extraction process. For creating training sets you will probably want to disable this option. For creating an alignments file for convert, using a high re-feed value, then if you can deal with the much longer extract time, this can be worthwhile selecting. It is worth noting that disabling this option when using high re-feed values and using re-align will likely undo most of the benefit of using re-feed.

- Filter Realign - Re-aligning only brings any benefit for faces which have been correctly aligned in the first instance. Faces which have not been correctly detected/aligned will not be improved by running them through the re-aligner. Enabling this option filters out any faces that have failed the aligner-filter test from the re-align process.

- The below image shows the aligned face and landmarks for the same source with various combinations of re-align and re-feed applied:

- source: The original video put through the aligner

- no_refeed no_realign: Both re-align and re-feed disabled

- re-align: Only re-align enabled

- refeed-7: Only re-feed enabled, with a value of 7

- refeed-7_realign_once: Re-feed enabled with a value of 7. Realign Refeeds disabled

- refeed-7_realign_once: Re-feed enabled with a value of 7. Realign Refeeds enabled

Extract Setup

Now you have reviewed the possible configuration settings, it is time to extract some faces. Head over to the Extract tab in the GUI:

Data

This is where we select our input videos or images, and our output location. We can extract faces from a single source, be it a folder of images or a video file, or we can run extraction in "batch mode" which allows us to extract faces from multiple sources.

- Input Dir: First up we need a source. This can be set to either a video file (video icon) or to a folder containing a series of images (picture icon).

- If you enable the Batch Mode option then this must be a folder. Within the selected folder you should place all of the video files or folder of images that you wish to extract faces from.

- Output Dir: Next we need to tell the process where to save the extracted faces to. Click the folder icon to select an output location.

- If you enable the Batch Mode option, then sub-folders for each of the sources you have selected in the input dir will be created for extracting the faces into.

- Batch Mode: Select this option if you wish to process multiple sources (i.e. several videos and/or folders of images). If you select this option, make sure that your Input Dir is a folder that only contains sources you wish to extract faces from.

- I ignore the next option, so you probably should too, but an explanation is below:

- Alignments: This option is in case you want to specify a different name/location for the alignments file. It's a lot easier to leave this blank, and let the process save it out to the default location (which will be in the same location as the source frames/video). If you have enabled Batch Mode then this option is not supported anyway and will be ignored.

Plugins

The plugins are used for first detecting faces in an image, reading the face's landmarks to align the image and creating a mask using a variety of masking methods.

Detector, Aligner: Select the ones you want to use.

- At the time of writing the best detector is S3FD and the best aligner is FAN

- External: As well as the built in detector/aligner plugins, alignment data can be imported into Faceswap. See this post for more information on how to import external data.

Masker: Select which masker(s) you would like to use. Any masker selected here will generate a mask in addition to the landmark based masks. Multiple masks can be generated (by selecting more than 1 masker), however be aware that all of these maskers use the GPU, so the more maskers you add, the slower the extract speed will be. The landmark based masks (Components and Extended) are automatically generated during extraction and are detailed below so that you know how they perform.

Each masker has different pros and cons. If you wish to use a Neural Network based mask for training or converting your frames then you can choose a mask here. The bisenet-fp mask is easily the best performing mask.

I tend to leave all the maskers off if I'm generating a set for conversion, as I'll want to amend a lot of the landmarks first, then generate the masks after the faces have been fixed (using the mask tool). It is worth noting that any manual edits you make for Landmarks will delete any NN generated masks for that face (You can read why here: viewtopic.php?p=3721#p3721), so you will save time by not generating an NN mask at this point.

For training sets, I will pick the mask that I intend to use for training with, as I don't tend to make landmark edits for training sets (I just delete the faces I won't be using).

Note that you can always add more masks to an alignments file with the Mask tool.

BiSeNet-FP - A relatively lightweight NN mask, ported from https://github.com/zllrunning/face-parsing.PyTorch. This offers a decent trade-off of resource requirement and accuracy. With that said, BiSeNet-FP is definitely the best performing of the NN based masks and should be your go-to choice at the moment. This mask has additional options within its configuration settings that allow for the inclusion/exclusion of hair, ears, eyes and glasses within the mask area. There are 2 weights options:

- original - Trained on the CelebAMask-HQ data set. This is a decent model although it still struggles with some (particularly skin) obstructions.

- faceswap - Trained on manually curated posed and 'in the wild' faceswap extracted data. These weights better handle difficult conditions, obstructions and multiple faces in an extracted image. You can read more about these trained weights and see examples here: https://github.com/deepfakes/faceswap/pull/1210

Note: BiSeNet-FP is currently the only mask that is compatible with "full head" training, as the hair needs to be included within the mask area to be able to train this way.

- BiSeNet-FP with Hair and ears excluded from the mask (default settings):

- BiSeNet-FP with Hair and ears included in the mask (hair required for full head training):

Unet-DFL - A Neural Network mask designed to provide smart segmentation of mostly frontal faces. The mask model has been trained by community members.

VGG-Clear - A Neural Network mask designed to provide smart segmentation of mostly frontal faces clear of obstructions.

VGG-Obstructed - designed to provide smart segmentation of mostly frontal faces. The mask model has been specifically trained to recognize some facial obstructions (hands and eyeglasses).

The following Landmarks based masks are automatically created:

- Components - A mask generated from the 68 point landmarks found in the alignments phase. This is a multi-part mask built around the outside points of the face.

- Extended - One of the main problems with the components mask is that it only goes to the top of the eyebrows. The Extended Mask is based off the components mask, but attempts to go further up the forehead to avoid the "double eyebrow" issue (i.e. when both the source and destination eyebrows appear in the final swap).

Normalization: This processes the image fed into the extractor to better find faces in difficult lighting conditions. Different normalization methods work with different datasets, but I find hist to be the best all-rounder. This will slightly slow extraction, but it can lead to better alignments:

Re Feed: This option will re-feed slightly adjusted detected faces back into the aligner, then average the final result. This helps reduce "micro-jitter". For training sets, I will leave it at zero. For generating alignments for convert I will put this on. The higher the number of re-feeds, the better, but each increment slows down the extraction speed. Set this as high as you feel you have the patience for, but anything above 9 is probably not worth it.
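The idea behind re-feed (and the Filter Refeeds configuration option) can be sketched roughly as follows. This is only an illustration of "adjust, re-align, filter, average": `mock_aligner`, the 2 pixel jitter and the fallback when every pass fails the filter are assumptions I have made for the example, not how Faceswap implements it.

```python
import random


def mock_aligner(box):
    """Stand-in for a real aligner: returns 68 (x, y) points laid out inside the box."""
    left, top, size = box
    return [(left + size * (i % 10) / 10, top + size * (i // 10) / 10) for i in range(68)]


def jitter(box, amount, rng):
    """Shift the detected box by up to `amount` pixels in each direction."""
    left, top, size = box
    return (left + rng.uniform(-amount, amount), top + rng.uniform(-amount, amount), size)


def align_with_refeed(box, refeeds, is_valid=lambda points: True, seed=0):
    """Average the landmarks from the original box plus `refeeds` adjusted copies."""
    rng = random.Random(seed)
    boxes = [box] + [jitter(box, 2.0, rng) for _ in range(refeeds)]
    results = [mock_aligner(candidate) for candidate in boxes]
    # "Filter Refeeds": drop passes that fail the filter test before averaging.
    # Falling back to every pass when none survive is a choice made for this sketch.
    kept = [points for points in results if is_valid(points)] or results
    return [
        (sum(p[i][0] for p in kept) / len(kept), sum(p[i][1] for p in kept) / len(kept))
        for i in range(68)
    ]


landmarks = align_with_refeed((100.0, 100.0, 64.0), refeeds=7)
print(len(landmarks))
```

Each extra re-feed is another full pass through the aligner, which is why every increment slows extraction down.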

The below image shows re-feed set to 8 (left) vs re-feed set to 0:

Identity: In a later step you will be sorting faces. Enabling this option allows you to collect the 'identity encoding' for each of the faces found during this extraction. This will save time during the sorting phase by collecting the identity information now, rather than when running the sort-tool. It is up to you whether you enable this now or not, but I tend to enable this option.

Re Align: Attempt to improve the alignments by re-feeding the initially aligned face back through the aligner. This option can bring back better alignments at a speed cost. It will only help with faces that are rotated (EG: faces that appear upside down in the original frame) or faces at extreme angles (EG: profiles). This option will not improve the alignments of any faces that are completely mis-aligned (ie those not detected properly in the first place) but can improve the alignments of faces which have been aligned and detected correctly, but the alignments are a little bit off. This option has configurable settings to dictate how it is executed.

- The below image shows the aligned face and landmarks for the same source with various combinations of re-align and re-feed applied:

- source: The original video put through the aligner

- no_refeed no_realign: Both re-align and re-feed disabled

- re-align: Only re-align enabled

- refeed-7: Only re-feed enabled, with a value of 7

- refeed-7_realign_once: Re-feed enabled with a value of 7. Realign Refeeds configuration option disabled

- refeed-7_realign_once: Re-feed enabled with a value of 7. Realign Refeeds configuration option enabled

The next few options can also be ignored. Again, I will detail what they do so you can make your own call:

- Rotate Images: This is entirely unnecessary with all current detectors except for maybe CV2-DNN. The current detectors are perfectly capable of finding faces at any orientation, so this will just slow down your process for little or no gain.

Face Processing

Options for face filtering and selection are defined here.

Min-size: I normally set this at a fairly low value, but above zero, to filter out anything that is clearly too small to be a face. It will, however, depend on the size of your input frames, and then the size of the faces within your frames:

Filters: Face filters allow you to filter out faces that are not your target face. For both the filter and nfilter you can specify either a folder containing faces you wish to filter out (right hand button) or select multiple files (left hand button). Both of the filters work in the same way.

The filters work best when using multiple images of each identity, at a wide variety of expressions, angles and lighting conditions. I find that between 8-16 images of each identity works fairly well.

For each face that is found during the detection process, it is checked against every face that you have provided to the filter. If any of the faces match above the given threshold then the filter will consider this a match.

The filters can take either full-size images/frames containing the target, in which case face(s) in the image will be detected and aligned, or it can take already extracted Faceswap face images. I highly recommend using Faceswap extracted images as you can then be certain that the correct face is identified and is correctly aligned.

- Filter: These are identities that you wish to be extracted from the source video. Multiple identities can be included here (ie. you can include images of several people to ensure that they are all extracted). If you pass in a frame (rather than a Faceswap extracted face) to the filter and multiple faces are detected in the frame, then none of the identities found in the image will be used for filtering, as the process will not know which identity you are interested in.

- nFilter: These are identities that you wish not to be extracted from the source video. Again, multiple identities can be used. If you pass in a frame (rather than a Faceswap extracted face) and multiple faces are detected in the image, then all detected faces will be used for filtering.

- Ref Threshold: Choosing the correct value to use here can be tricky, as it will depend on what you plan to use these faces for (converting or training), the number and variety of faces used to feed the filter and whether you are using the filter to include ('filter') faces or exclude ('nfilter') faces. The below examples assume that 'filter' is being used. If nfilter is being used, then the threshold can probably be bumped a bit higher.

- For training, you will probably be less concerned if it filters out faces that you are interested in, so a value of around 0.65 - 0.75 will eliminate most false positives (assuming a decent variety of faces have been fed to the filter)

- For converting, it is far easier to remove false positives (ie: faces which you are not interested in keeping, but have been extracted) from the extract output than it is to re-insert false negatives (ie: faces you are interested in keeping, but have been filtered out) back into the alignments file. With this in mind, I find that a value of 0.425 - 0.5 does a fairly good job of limiting false negatives, whilst also filtering out a significant number of faces that you are not interested in, assuming a decent selection of faces have been passed to the filter.
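To show how the threshold interacts with the filter and nfilter, here is a minimal sketch. It assumes that each face is represented by an identity vector and that vectors are compared with cosine similarity. Both of these, along with the function names and the order in which the two filters are checked, are assumptions for illustration only.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two identity vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def matches_any(face, references, threshold):
    """A face is a match if it scores above the threshold against ANY reference."""
    return any(cosine_similarity(face, ref) > threshold for ref in references)


def keep_face(face, filter_refs, nfilter_refs, threshold):
    """Apply 'nfilter' (exclude) and 'filter' (include) reference faces."""
    if nfilter_refs and matches_any(face, nfilter_refs, threshold):
        return False
    if filter_refs and not matches_any(face, filter_refs, threshold):
        return False
    return True


# Toy 2D "identity vectors" purely for demonstration
target = [1.0, 0.0]
similar = [0.9, 0.1]
stranger = [0.0, 1.0]
print(keep_face(similar, [target], [], 0.5), keep_face(stranger, [target], [], 0.5))
```

Raising the threshold makes a match harder to achieve, so with 'filter' you keep fewer faces (fewer false positives, more false negatives), which is why a lower value is the safer choice when extracting for convert.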

Output

Options for the output processing of faces.

Size: This is the size of the image holding the extracted face. Specifically, this is the size of the full head extracted image. When training a 'sub-crop' of this image will be used depending on the centering you have chosen; either 'Face' (the green box) or 'Legacy' (the yellow box):

- If you are extracting for sorting a dataset/cleaning an alignments file etc. then it can make sense to output these at a smaller size (say 128px). This smaller file size will allow any previews to load faster when going through the images in a file manager, which can save quite some time.

- If you are extracting for a training set, then in most cases the default 512px will be fine. This would give a face sub-crop size of 384px and a 'legacy' sub-crop size of 308px. If you are training higher resolution models then you may want to up the size here. You can calculate the required extract size by looking at the output size of the model you want to train. Assuming you intend to train with face coverage, then you would want to multiply the model output size by 1.333 (and round up a little to an even number). So for a 448px model, you would need an extract size of 448 * 1.333 = 597.18 or 598px. If you intend to train with 'legacy' centering then the multiplier would be 1.666.
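The size calculation above is easy to script if you would rather not do it by hand. This is just a small helper using the multipliers quoted above; the function name is my own, and "round up a little to an even number" is interpreted here as rounding up to the next even whole number.

```python
import math

# Multipliers quoted in this guide for each centering type
MULTIPLIERS = {"face": 1.333, "legacy": 1.666}


def required_extract_size(model_output_px, centering="face"):
    """Extract size needed so the training sub-crop covers the model output size."""
    size = math.ceil(model_output_px * MULTIPLIERS[centering])
    return size + size % 2  # bump odd sizes up to the next even number


print(required_extract_size(448))            # 598
print(required_extract_size(448, "legacy"))  # 748
```

As a sanity check, a 384px model with face centering gives 512px, which matches the default extract size.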

Extract Every N: The value that you set for this option will depend on the purpose of this extraction.

- If you are extracting for convert, or you are extracting for convert AND will be using some of the faces for training, then leave this on 1 (i.e. extract from every frame)

- If you are extracting for training only, then set a value that seems sane. This will depend on the number of frames per second your input is. However, for a 25fps video, sane values are between about 12 - 25 (i.e. every half second to a second). Anything less than that and you will probably end up with too many similar faces. It is worth bearing in mind how many sources you are extracting from for your training set, and how many faces you want to have in your final training set, as well as how long your source is. These will all vary on a case by case basis.
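If it helps to reason about this in terms of time rather than frames, the following sketch converts "one face every X seconds" into an Extract Every N value and estimates how many frames will be processed. The function names are my own, and it assumes extraction starts at the first frame and then takes every Nth frame.

```python
def extract_every_n(fps, seconds_between_faces):
    """Frame step that samples roughly one frame per `seconds_between_faces`."""
    return max(1, round(fps * seconds_between_faces))


def frames_sampled(total_frames, every_n):
    """How many frames would be processed for a given step."""
    return len(range(0, total_frames, every_n))


fps = 25
total_frames = fps * 60 * 10                      # a 10 minute video
step = extract_every_n(fps, 0.5)                  # every half second
print(step, frames_sampled(total_frames, step))   # 12 1250
```

Running this across each of your sources gives a quick feel for how large the final training set is likely to be before you commit to a long extraction.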

These next few options are generally fine at default settings, but explanations are below:

- Save interval: I don't bother with, but it's up to you.

- Debug Landmarks: Draws landmarks on the extracted face. Only useful for debugging purposes.

Run

We're now ready to review our options and run Extraction. You should end up with a screen similar to below:

Hit Extract and wait for your faces to output and your alignments file to be generated.

Sorting

Now that we have extracted our faces, we need to clean up the dataset and the alignments file. The Extractor does a good job of getting faces, but it isn't perfect. It will have got some false positives, it will have failed to align some faces and it will have also extracted people who we don't want to swap. Most likely if you go to your faces folder you will have large sections of the output which look something like this:

Cleaning that up doesn't look like it will be fun! Fortunately we can make this easier. The quickest and easiest way to clean our dataset is to sort the faces into a meaningful order and then delete all the faces we don't want. There are several sorting and grouping methods available. Generally, sorting by face works best, but it can sometimes be useful to sort/group by some of the quicker methods first (like size or distance), to get rid of some of the easier to target false-positives.

Sorting by face can be useful when put into separate bins. The clustering process does a fairly decent job of grouping the same faces together in the same bins. You can group by faces and sort within each group of faces with another metric (such as yaw or distance), however, I find that it generally works best if you both sort and group by face.

NB: Sorting by face is RAM intensive. It has to do a LOT of calculations. I have tested successfully sorting 22k faces on 8GB of RAM. If you have to sort more faces than can be held in RAM, then the process will automatically switch to a much slower method, so if you are RAM limited, then you may want to split your dataset into smaller subsets. Theoretically, the amount of RAM required is (n² * 24) / 1.8 where n is the number of images. You will need to take any other RAM overhead into account too (i.e. other programs running, the images loaded to RAM), but theoretically it means 30,000 images will take (30000² * 24) / 1.8 = 12,000,000,000 bytes or about 11GB.
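The RAM formula above can be turned into a quick calculator. The function names are my own, and the result is only the theoretical figure from the formula, so you still need to allow for other overhead on top.

```python
import math


def sort_by_face_ram_bytes(num_images):
    """Theoretical RAM needed to sort by face: (n^2 * 24) / 1.8 bytes."""
    return (num_images ** 2 * 24) / 1.8


def max_images_for_ram(ram_bytes):
    """Invert the formula: the largest n that fits in the given amount of RAM."""
    return int(math.sqrt(ram_bytes * 1.8 / 24))


GIB = 1024 ** 3
print(round(sort_by_face_ram_bytes(30000) / GIB, 1))  # 11.2
print(max_images_for_ram(8 * GIB))                    # 25381
```

The second figure is why sorting 22k faces on 8GB works: the theoretical ceiling for 8GB is a little over 25k images before any other overhead is counted.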

Head over to the Tools tab in the GUI and then to the Sort sub-tab:

Data

This is where we select the Faceset that we extracted in the previous step for sorting.

- Input: Enter the folder which contains your extracted faces from the previous step.

- Output: This can be empty, or you can specify an output location. I prefer to sort in place, so leave it empty.

- Batch Mode: It is possible to process multiple folders of faces at a time by enabling this option. If Batch Mode is selected, then the Input should contain several sub-folders of faces to sort. Each subfolder will be processed as a separate sort job (as if you had run the sort command multiple times on multiple folders).

- Keep: It's quicker to leave this disabled and allow the source files to be renamed. If you are worried about losing data, you can select this option and the files will be copied to the new location/filename rather than being moved, with the original files kept in their original location.

Sort Settings

How we want to sort the faces. Sorting orders the images in a folder by changing their filename so that they can be sorted in a file manager.

The below instructions are for sorting by face. There are plenty of other ways to sort and group the data, but the basic steps to follow are the same.

- Sort By: Set this to Face. You can also set it to None (to keep the original filenames) and just rely on the grouping in the next step, or sort by another metric. I find that both sorting and grouping by face leads to the easiest folders to clean up.
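"Sorting by renaming" simply means giving each file a new name that reflects its position in the sorted order. A minimal sketch of the idea is below. The zero-padded prefix naming scheme and the function name are assumptions for illustration, not the exact names that the sort tool produces.

```python
def sorted_names(scores):
    """Map each original filename to a new name that sorts by its score.

    scores: original filename -> sort metric (higher scores come first).
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: f"{rank:06d}_{name}" for rank, name in enumerate(ordered)}


renamed = sorted_names({"a.png": 0.2, "b.png": 0.9, "c.png": 0.5})
for new_name in sorted(renamed.values()):
    print(new_name)
```

Because the rank is baked into the filename, any file manager sorting by name will show the faces in sorted order, which is what makes bulk deleting unwanted faces quick.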

Group Settings

How we want to group the faces and related settings. Grouping places all images, within a certain threshold, into separate folders (or 'bins').

- Group By: Set this to Face. This will attempt to group similar faces together in separate folders.

- Threshold: Generally leaving this at default (-1.0) is fine.

- Bins: For grouping by face, this option does not do anything. It is used for other grouping methods though, so check the tooltips.

Output

How we want our sorted faces to be output.

- This section is entirely deprecated and will be removed in a future update. There is no need to set anything here.

Run

Leave all other options at default. We're now ready to review our options and run Sort.

- You should end up with a screen similar to below:

- Hit Sort to start the sorting process.

The process will start reading through the faces building up an identity for each. It will then cluster the faces together by similarity. The actual clustering process can take a long time as it has to compute a lot of data. Unfortunately there is no visual feedback as to its progress, so please be patient.

Once complete you should find 99% of the faces sorted together:

And all the junk sorted together as well:

Now just go through each of the bins (sub-folders), deleting those faces/folders you do not want to keep, and moving any faces you do want to keep back into the parent folder.

Clean the Alignments File

Now we have removed all of the faces we don't want and just have a set that we do, we need to clean the alignments file. Why? Because all the information about the unwanted faces is still in the file, and this is likely to cause us problems in future. Cleaning the alignments file with the integrated tool also has the added advantage of renaming our faces back to their original filename, so it's a win-win.

Navigate to the Tools tab then the Alignments sub-tab:

Processing

This section allows us to choose what action we wish to perform, as well as setting any output processing. We're only interested in the Job section.

- Job: This is a list of all of the different alignments jobs that are available in the tool.

- Select remove-faces

- Output: Ignore this section as the remove-faces job does not generate any output.

Data

The location of the resources we want to process.

- Alignments File: select the alignments file that was generated during the extraction process. It will be located in the same folder as the video you extracted from, or within the folder of the images you extracted from.

- Faces Folder: select the faces folder that you outputted faces into as part of the extraction process (the same folder as you used for sort).

- Leave the other options blank in this section as they are not required.

Run

Leave all other options at default. We're now ready to review our options and clean the alignments file.

- You should end up with a screen similar to below:

- Hit Alignments and let the process complete.

Once done your faces will have been renamed back to their default names, and all of the unwanted faces will have been removed from your alignments file.

The process will have backed up your old alignments file and put it next to your newly created file in its original location. It will have the same name as your cleansed alignments file but with "backup_<timestamp>" appended on to the end of it. If you are happy that your new alignments file is correct, you can safely remove this backup file.
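If you want to see which backups have piled up before deleting anything, a small script can list them. This is only a sketch: the `find_backups` helper is hypothetical, and it assumes the backup name starts with the alignments file's name and contains "backup_", as described above. Check the names in your own folder before relying on it.

```python
from pathlib import Path


def find_backups(alignments_file):
    """List files next to alignments_file that look like its backups."""
    alignments_file = Path(alignments_file)
    return sorted(
        path
        for path in alignments_file.parent.iterdir()
        if path.is_file()
        and path != alignments_file
        and path.name.startswith(alignments_file.stem)
        and "backup_" in path.name
    )


# Hypothetical usage: print the candidates, then delete by hand once happy
# for backup in find_backups("/path/to/video_alignments.fsa"):
#     print(backup)
```

It deliberately only lists the files. Deleting is left as a manual step so nothing is removed before you have checked the new alignments file.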

At this point, if you are extracting for convert (or the set is going to be used for convert and training) then you can entirely delete your faces folder. None of these faces are required any more. If you ever need to re-generate your face set, then this can be done with the Alignments Tool (extract job).

Manually Fix Alignments

Ok, we've extracted our faces, we've cleaned out all the trash, surely we're done now? Well hold on there fella. Sure, you could move on now, but do you want an ok swap or do you want a great swap?

Manual is useful for the following tasks:

- Removing any left over "multiple faces" in a frame

- Adding any alignments to those frames that are missing them

- Fixing misaligned frames

- Manually editing any masks you wish to use

What we are extracting our dataset for will dictate what we want to do here. If we are purely extracting for a training set, we could feasibly skip this step altogether, although it is a good idea to review the existing alignments to make sure any masks get built correctly.

If extracting for convert, then at the absolute minimum we want to fix any multiple faces in frames, and any frames missing alignments. This alone will improve the final swap. Depending on how thorough you want to be, you can then fix any poor alignments.
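The two minimum checks boil down to counting faces per frame. The sketch below shows that logic on a plain dictionary of frame name to face count. The dictionary and the `frames_needing_attention` helper are hypothetical stand-ins for illustration and do not reflect the real alignments file format; the Manual Tool's filters do this job for you.

```python
def frames_needing_attention(faces_per_frame):
    """Split frames into those with no faces and those with multiple faces.

    faces_per_frame maps a frame name to the number of faces found in it.
    Returns (frames_without_faces, frames_with_multiple_faces).
    """
    no_faces = sorted(f for f, count in faces_per_frame.items() if count == 0)
    multiple = sorted(f for f, count in faces_per_frame.items() if count > 1)
    return no_faces, multiple


# Hypothetical counts for three frames
counts = {"frame_0001.png": 1, "frame_0002.png": 0, "frame_0003.png": 2}
missing, multi = frames_needing_attention(counts)
print("No faces:", missing)
print("Multiple faces:", multi)
```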

I won't go into full details on how to use the Manual Tool. That is a guide in itself, but should be quite intuitive, with tooltips for all of the available options.

Launch the Manual Tool

To launch the Manual tool first navigate to the Tools tab then the Manual sub-tab:

Data

This is where we specify the alignments file and video file/frames folder we wish to work with.

- Alignments: select the alignments file that was generated during the extraction process. It will be located in the same folder as the video you extracted from, or within the folder of the images you extracted from.

- Frames: select the video or the frames folder that you used as an input for the extraction process

Options

These are optional actions that can be run. You won't generally need to change these, but I'll list what they do in case you do need to utilize them.

- Regen Thumbs: The manual tool stores a cache of thumbnails for populating the Faces Viewer part of the tool. If you have used the old manual tool with your alignments file, then it is possible that the thumbs may have gone out of sync with the actual extracted faces. This option regenerates the thumbnails so that they are correct with the data that exists within the alignments file.

- Single Process: In order to speed up the generation of the thumbnail cache, the process launches several threads to read the images from the video file. On some videos this causes the process to hang. If this happens to you, then select this option to extract the thumbnail in a single thread.

Run

Leave all other options at default. We're now ready to review our options and launch the manual tool.

- You should end up with a screen similar to below:

- Hit the Manual button to launch the manual tool.

- NB: It may take a minute or two for the Manual Tool to launch, as it relies on your GPU, so needs to initialize the aligners/maskers that it will use.

Manually Fixing for Converting

For fixing for convert we want to do the following:

Multi-Faces

- Change the filter mode to Multiple Faces in the filter pull down located between the Frames and Faces Viewer

- You can right click faces in the Faces Viewer to delete them

- Alternatively, you can change the Editor to Bounding Box or Extract Box on the left-hand button bar and right click faces you want to delete from the Frame Viewer.

- Use the transport buttons or "Z" and "X" to navigate to the previous or next frame with multiple faces.

- Press "Ctrl+S" to save out your amended alignments file, or press the save icon located between the Frames and Faces Viewer.

Frames without faces

- Change the filter mode to No Faces in the filter pull down located between the Frames and Faces Viewer

- Change the edit mode to Bounding Box editor using the left-hand button bar

- Use the transport buttons or "Z" and "X" to navigate to the previous or next frame without faces.

- If you hit a frame where no face was detected but should have been, you can either click on the face and adjust the bounding box with the mouse, or you can copy the previous/next frame's alignments using the copy buttons on the left-hand side or using the "C" or "V" keys and edit those.

- Repeat until you reach the last frame

- Press "Ctrl+S" to save out your amended alignments file, or press the save icon located between the Frames and Faces Viewer.

Fixing alignments

- Change the filter mode to Has Faces in the filter pull down located between the Frames and Faces Viewer

- You can navigate through the frames with the transport controls, or click on a face in the Faces Viewer to jump straight to any frames that need attention.

- If the alignments look incorrect, use the appropriate editor from the left-hand button bar to fix them up

- Repeat until you reach the last frame

- Press "Ctrl+S" to save out your amended alignments file, or press the save icon located between the Frames and Faces Viewer.

A backup of your alignments file will have been created each time you saved during manual adjustment. If you are happy that your new alignments file is correct, you can delete these backups. They will be located in the same folder as the original alignments file.

Manually Fixing for a Training Set

For fixing for training we want to do the following:

- Fixing alignments

- Change the filter mode to Has Faces in the filter pull down located between the Frames and Faces Viewer

- You can navigate through the frames with the transport controls, or click on a face in the Faces Viewer to jump straight to any frames that need attention.

- If the alignments look incorrect, use the appropriate editor from the left-hand button bar to fix them up

- Repeat until you reach the last frame

- Press "Ctrl+S" to save out your amended alignments file, or press the save icon located between the Frames and Faces Viewer.

A backup of your alignments file will have been created each time you saved during manual adjustment. If you are happy that your new alignments file is correct, you can delete these backups. They will be located in the same folder as the original alignments file.

Finally

If you have edited any alignments, then you will need to regenerate any NN masks for those faces. This is because when you edit landmarks, the alignment of the face changes and the NN mask becomes invalid, so the process deletes it. You can read more about why this happens here: viewtopic.php?p=3721#p3721. Masks can be regenerated using the Mask tool.

Once the alignments are fixed, you will need to regenerate your faceset. This is because the face image will have changed due to moving the landmarks, so the training process will not be able to find the faces you amended.

Firstly delete your output faces folder. You don't want this around any more.

You can then hit the Folder button located next to the save icon to extract faces straight from the alignments file.

An alternative method is listed below, but if you have successfully extracted from the Manual Tool, you can jump straight to Extracting a training set from an alignments file.

Navigate to the Tools tab then the Alignments sub-tab:

Processing

This section allows us to choose what action we wish to perform, as well as setting any output processing. We're only interested in the Job section.

- Job: This is a list of all of the different alignments jobs that are available in the tool.

- Select Extract job.

- Output: Ignore this section as the extract job does not generate any output.

Data

The location of the resources we want to process.

- Alignments File: select the alignments file that was generated after cleaning in the last step. It will be located in the same folder as the video you extracted from, or within the folder of the images you extracted from.

- Faces Folder: select an empty folder where you want the outputted faces to go.

- Frames Folder: select the video or the frames folder that you used as an input for the extraction process

- Leave any other options in this section blank as they are not required for this step.

Run

Leave all other options at default. We're now ready to review our options and launch the alignments tool.

- You should end up with a screen similar to below:

- Hit the Alignments button to re-extract your faces.

Extracting a training set from an alignments file

Now you've cleaned up your alignments file, you may want to pull some of these faces out to use for a training set. This is a simple task.

Navigate to the Tools tab then the Alignments sub-tab:

Processing

This section allows us to choose what action we wish to perform, as well as setting any output processing. We're only interested in the Job section.

- Job: This is a list of all of the different alignments jobs that are available in the tool.

- Select Extract job.

- Output: Ignore this section as the extract job does not generate any output.

Data

The location of the resources we want to process.

- Alignments File: select the alignments file that was generated after cleaning in the last step. It will be located in the same folder as the video you extracted from, or within the folder of the images you extracted from.

- Faces Folder: select an empty folder where you want the outputted faces to go.

- Frames Folder: select the video or the frames folder that you used as an input for the extraction process

- Leave any other options in this section blank as they are not required for this step.

Extract

These are options for extracting faces from an alignments file.

- Extract Every N: This will depend on the number of frames per second your input is. However, for a 25fps video, sane values are between about 12 - 25 (i.e. every half second to a second). Anything less than that and you will probably end up with too many similar faces. It is worth bearing in mind how many sources you are extracting from for your training set, and how many faces you want to have in your final training set, as well as how long your source is. These will all vary on a case by case basis.

- Size: This is the size of the image holding the extracted face. Currently no models support above 256px so leave this at default

- Large: Enabling this option will only extract faces that have not been up-scaled to the output size. For example, if the extract size is set to 512px and a face found in a frame is 480px, it will not be extracted. If it is 520px it will.
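To help pick values for these options, the arithmetic can be sketched as below. The function names are made up for this example. The face estimate assumes roughly one face per frame, and treating a face exactly equal to the output size as "not up-scaled" is my assumption about the boundary case, not something confirmed here.

```python
import math


def every_n_for_interval(fps, seconds):
    """Extract Every N value that samples roughly one frame per `seconds`."""
    return max(1, int(fps * seconds))


def estimated_faces(total_frames, every_n):
    """Rough face count, assuming about one face per sampled frame."""
    return math.ceil(total_frames / every_n)


def kept_by_large(face_px, output_px):
    """True if the face would not need up-scaling to reach the output size."""
    return face_px >= output_px


# A 25fps source sampled every half second and every second
print(every_n_for_interval(25, 0.5), every_n_for_interval(25, 1.0))

# A 10 minute clip at 25fps is 15,000 frames
print(estimated_faces(15000, 12), estimated_faces(15000, 25))

# The Large example from the list above, with a 512px extract size
print(kept_by_large(480, 512), kept_by_large(520, 512))
```

For that 10 minute clip, N=12 gives around 1,250 faces and N=25 gives 600, which is a handy way to judge whether a source will contribute too many or too few faces to the final set.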

Run

Leave all other options at default. We're now ready to review our options and extract our training set from our cleansed alignments file to our selected folder.

- You should end up with a screen similar to below:

- Hit the Alignments button to extract the faces.

Repeat this process for as many sources as you wish to generate training sets for.

Once complete, you can place all of the facesets into the same folder. Your training set is ready.
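Before merging several facesets into one folder, it is worth checking that no two sources produced a face with the same filename, otherwise one would overwrite the other. This is a sketch of such a check. The `find_name_collisions` helper is hypothetical and assumes the faces are .png files directly inside each faceset folder.

```python
from collections import defaultdict
from pathlib import Path


def find_name_collisions(faceset_folders):
    """Return {filename: [folders]} for names present in more than one folder."""
    seen = defaultdict(list)
    for folder in faceset_folders:
        for face in Path(folder).glob("*.png"):
            seen[face.name].append(str(folder))
    return {name: folders for name, folders in seen.items() if len(folders) > 1}


# Hypothetical usage: an empty result means the folders are safe to merge
# print(find_name_collisions(["/path/to/faces_a", "/path/to/faces_b"]))
```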

Re-inserting filtered out faces into an alignments file

This section covers how to re-insert filtered out faces into an alignments file, if save-filtered has been enabled in the Extract Settings section.

As stated previously, it is easier to remove false-positives from the extraction output than it is to re-insert false-negatives back into an alignments file, but sometimes you will find that you need to do this. If this applies to you then follow these steps.

- Firstly delete/backup your original alignments file. You won't need it anymore because we will be creating a new alignments file from the faces you want to keep.

- Place all the faces that you want to keep into the same folder. That is, the faces that you have curated from the extraction output in addition to any faces that you have chosen to rescue from the sub-folders containing the filtered output.

- Open up the alignments tool by navigating to the Tools tab then the Alignments sub-tab:

- Under Processing select the From-Faces job

- Under Data press the button next to Faces Folder and navigate to the folder you created in the previous step, containing the faces you want to keep.

- These are the only options you need to update. The settings should look something like this:

- Hit the Alignments button.

- A new alignments file will be generated and placed in your faces folder. Move this alignments file back to where your original alignments file was to replace it.